Week 6

Posted: 16 October 2011 | Filed under: project 52 | Tags: processing, project52

This week’s visualisation uses the Gatwick Airport news feed (http://www.gatwickairport.com/rss/) and produces a radar-screen effect driven by the number of times the word “delay” appears.
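The counting step can be sketched in plain Java, since Processing sketches are Java underneath. This is a minimal sketch, not the original code. It assumes the feed text has already been downloaded into a string, and it counts only the exact word “delay” (case-insensitive), so “delayed” and “delays” are ignored. The post doesn't describe how the count becomes radar blips, so that part is left out.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class DelayCounter {
    // Counts whole-word, case-insensitive matches of `word` in `text`.
    // The \b word boundaries mean "delayed" and "delays" are NOT counted.
    static int countWord(String text, String word) {
        Pattern pattern = Pattern.compile(
                "\\b" + Pattern.quote(word) + "\\b", Pattern.CASE_INSENSITIVE);
        Matcher matcher = pattern.matcher(text);
        int count = 0;
        while (matcher.find()) {
            count++;
        }
        return count;
    }

    public static void main(String[] args) {
        // Hypothetical feed text standing in for the downloaded Gatwick RSS items.
        String feed = "Delay on the A23. Flights delayed. No delay expected later.";
        System.out.println(countWord(feed, "delay")); // prints 2
    }
}
```

Dropping the trailing word boundary would also count variants such as “delayed”, at the cost of matching unrelated words that merely start with “delay”.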

Week 5

Posted: 9 October 2011 | Filed under: project 52 | Tags: processing, project52

So I’m returning to the weather for this week’s project, using the wind speed reported by the Arch-OS feed (http://www.arch-os.com/livedata/). The value from the wind speed sensor controls how fast the rectangle revolves, much like a virtual wind vane.
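A wind vane like this comes down to adding a wind-dependent step to the rectangle’s angle on every frame. The sketch below is a hedged approximation, not the original: `RADIANS_PER_UNIT` is a hypothetical scale factor, and the wind speed is a made-up reading rather than a live Arch-OS value. In a Processing `draw()` loop, the resulting angle would be passed to `rotate()` before drawing the rectangle.

```java
public class WindVane {
    // Hypothetical scale: radians of rotation added per frame for each unit of wind speed.
    static final double RADIANS_PER_UNIT = 0.01;

    // Advances the angle by one frame's worth of rotation, wrapped into [0, 2*PI).
    static double nextAngle(double angle, double windSpeed) {
        double next = (angle + windSpeed * RADIANS_PER_UNIT) % (2 * Math.PI);
        return next < 0 ? next + 2 * Math.PI : next;
    }

    public static void main(String[] args) {
        double angle = 0;
        double windSpeed = 5.0; // hypothetical sensor reading
        for (int frame = 0; frame < 10; frame++) {
            angle = nextAngle(angle, windSpeed);
        }
        // 10 frames at 0.05 rad per frame
        System.out.printf("%.2f%n", angle); // prints 0.50
    }
}
```

Because the step is recomputed every frame, a stronger reading speeds the vane up straight away and a lull slows it down.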

Week 4

Posted: 2 October 2011 | Filed under: project 52 | Tags: processing, project52

So this week’s sketch makes use of the Radio 1 Mini Mix podcast (http://downloads.bbc.co.uk/podcasts/radio1/r1mix/rss.xml). It takes the first track and uses the beat to draw circles or squares; the beat also determines the colours and the level of transparency. It takes a while to load, so please be patient. You might also want to turn the volume down, as it plays the track too 🙂

Week 3

Posted: 25 September 2011 | Filed under: project 52 | Tags: processing, project52

This week’s visualisation uses the poetry of Polly Lovell (http://pollylovellpoems.wordpress.com/feed/). A word is selected at random from the first poem and is then interpreted using 10 photos from Flickr. When viewing it, try moving the mouse over the images.

Week 2

Posted: 18 September 2011 | Filed under: project 52 | Tags: processing, project52

This week’s visualisation uses NASA’s image of the day (www.nasa.gov/rss/image_of_the_day.rss). It draws 12 instances of the image, all rotating around the same point, to give the impression of a star.
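Assuming the 12 copies are spaced evenly around a full turn (the post doesn't say), each copy sits 360 / 12 = 30 degrees from its neighbours, and adding a shared `spin` angle makes the whole star turn together. In Processing, each angle would typically be applied with `rotate()` after a common `translate()` to the centre point, before calling `image()`. The hypothetical helper below only computes the angles.

```java
public class StarAngles {
    // Returns one rotation (radians) per copy: evenly spaced around a full turn,
    // all offset by the same animation angle `spin` so they rotate together.
    static double[] copyAngles(int copies, double spin) {
        double[] angles = new double[copies];
        double step = 2 * Math.PI / copies;
        for (int i = 0; i < copies; i++) {
            angles[i] = spin + i * step;
        }
        return angles;
    }

    public static void main(String[] args) {
        for (double a : copyAngles(12, 0)) {
            System.out.printf("%.0f ", Math.toDegrees(a)); // 0 30 60 ... 330
        }
        System.out.println();
    }
}
```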

Week 1

Posted: 11 September 2011 | Filed under: project 52 | Tags: processing, project52

After much consideration, I decided to make the first visualisation about our national obsession – the weather. Using the BBC weather feed for Plymouth (http://newsrss.bbc.co.uk/weather/forecast/0013/Next3DaysRSS.xml), I’m taking the most up-to-date values to feed the sketch. Unfortunately, during testing I noticed that the feed isn’t updated as often as I would have liked, so the sketch could be displaying the sun when it’s actually tipping down!

Ecoids

Posted: 25 June 2009 | Filed under: Ramblings | Tags: ec-os, ecoid, processing, xbee

I’ve just been on a workshop to build ecoids using XBee. An ecoid collects eco-data such as humidity, light and temperature, and uses an XBee to transmit the values to a server, which in turn loads them into a database for publishing at a later date. Photos of the workshop can be found here.

Midi update

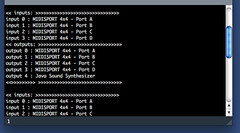

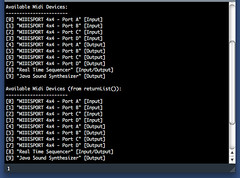

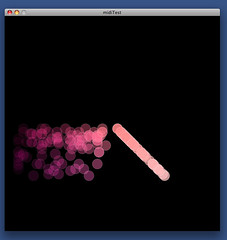

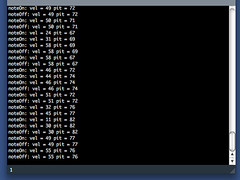

Posted: 22 March 2009 | Filed under: Masters, Negotiated Practice B | Tags: Masters, midi, processing

I’ve been a bit lax in charting my progress on this... mainly coz my progress has been pretty slow in my opinion. Anyways, I’ve been working on the visuals, mainly coz I’ve not been able to get Processing to talk to the MIDI controller, and until today I hadn’t been able to get over to the lab to spend any real time working out why. I essentially removed everything (Processing, all of its MIDI libraries and mmj), reinstalled it all, and it worked. Using scripts provided with the libraries, I was able to list the MIDI controller, and I knocked up a test that draws coloured circles to the stage depending on the values received, which you can see in the images above. So now I just need to put it all together with my existing scripts, write some more and then do a test with the skeleton.
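The circle test comes down to mapping each incoming controller value onto the circle’s appearance. MIDI controller values are 7-bit, so they run from 0 to 127. The sketch below is hypothetical: it doesn't use any of the Processing MIDI libraries (receiving the values is left out), and the 10 to 100 pixel size range and blue-to-red colour ramp are arbitrary choices for illustration.

```java
public class MidiCircle {
    // MIDI controller values are 7-bit: clamp anything outside 0..127.
    static int clamp(int value) {
        return Math.max(0, Math.min(127, value));
    }

    // Hypothetical mapping: 0 -> 10 px, 127 -> 100 px diameter.
    static float diameter(int value) {
        return 10 + (clamp(value) / 127f) * 90;
    }

    // Hypothetical colour ramp: 0 -> pure blue, 127 -> pure red, returned as {r, g, b}.
    static int[] colour(int value) {
        int red = Math.round(clamp(value) * 255 / 127f);
        return new int[] {red, 0, 255 - red};
    }

    public static void main(String[] args) {
        int[] readings = {0, 64, 127}; // hypothetical controller values
        for (int v : readings) {
            int[] c = colour(v);
            System.out.println(v + ": diameter=" + diameter(v)
                    + " rgb=(" + c[0] + "," + c[1] + "," + c[2] + ")");
        }
    }
}
```

In a Processing sketch, these values would feed `fill()` and `ellipse()` each time a new controller message arrives.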

Video Game as a Production Tool

Posted: 15 February 2008 | Filed under: Histories & Futures, Masters | Tags: gaming, hack, Masters, processing, sound

I’ve been a bit lax in updating my blog, but here are my thoughts and research.

I had originally been interested in exploring games as a metaphor for software development, considering my love of football, but the more I read, the more interested I became in video games being used as development software. I was already aware of machinima from my attempts at it last year, but I was keen to explore other avenues. One practice that started to appeal was sonichima: producing generative audio by playing a video game. Not being much of a gamer, I thought it would be fun to make a piece of ‘music’, then practise until I became more adept at the game and make another piece, almost like turning the game into a musical instrument. However, I discovered that becoming more adept at the game did little to improve the sound generated.

Following on from this, I did more research and was particularly interested in Alison Mealey’s project Unreal Art and Julian Oliver and Steven Pickles’ project q3apd. They used different games, but both relied on a common technique: building their own maps to gain more control over the outcome. I therefore decided to try the same using the Unreal Tournament level editor. Working backwards, in effect, I worked out where my bot needed to be so that the x and y coordinates logged to the system log file would generate the required note in my Processing script, or at least come close enough to be fairly recognisable. To hear the results, and to load up your own UT system log and make music, follow the link below.
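The “working backwards” step can be illustrated with a linear mapping and its inverse. The actual mapping in the Processing script isn't given in the post, so everything here is an assumption: only the x coordinate is used, the playable area is taken to span -4096 to 4096 Unreal units, and that span is spread across MIDI notes 48 to 72 (two octaves). The hypothetical `xForNote` gives the spot the bot would need to reach, and `noteForX` converts a logged position back into a note by rounding to the nearest semitone.

```java
public class CoordToNote {
    // Hypothetical bounds of the map along the x axis, in Unreal units.
    static final double MIN_X = -4096, MAX_X = 4096;
    // Hypothetical note range: MIDI 48 (C3) to 72 (C5).
    static final int LOW_NOTE = 48, HIGH_NOTE = 72;

    // Forward mapping: a logged x coordinate becomes the nearest MIDI note.
    static int noteForX(double x) {
        double t = (x - MIN_X) / (MAX_X - MIN_X);
        t = Math.max(0, Math.min(1, t)); // clamp positions outside the assumed bounds
        return (int) Math.round(LOW_NOTE + t * (HIGH_NOTE - LOW_NOTE));
    }

    // Inverse mapping ("working backwards"): where the bot must stand to play `note`.
    static double xForNote(int note) {
        double t = (double) (note - LOW_NOTE) / (HIGH_NOTE - LOW_NOTE);
        return MIN_X + t * (MAX_X - MIN_X);
    }

    public static void main(String[] args) {
        int[] melody = {60, 62, 64}; // hypothetical target notes: C4, D4, E4
        for (int note : melody) {
            double x = xForNote(note);
            System.out.printf("note %d -> x = %.1f -> note %d%n", note, x, noteForX(x));
        }
    }
}
```

With these numbers each semitone covers about 341 units, so a bot that lands within roughly 170 units of its target still produces the intended note, which is one way to get “as close as possible” to a recognisable result.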

Video Games as a Production Tool readme

We only come out at night

Posted: 17 October 2006 | Filed under: Ramblings | Tags: processing

I discovered this tucked away in the exhibition section of the Processing site. As they say on their site, it’s “an urban graffiti project involving interactive public projections”. Essentially, they find a location, set up hidden projectors and project these “jellies” onto a wall. If the jellies get angry, they grab the shadow of a passer-by and eat it. The next day, they move on to a different location. They’ve also set up a website to help promote the project and create a mythology around it.